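In this walkthrough we use images as an example to show how to crawl non-text content with the Scrapy framework.

The two previous Scrapy walkthroughs already explained the basic operations in some detail, so this tutorial will not repeat them at length.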

Target site: ZCOOL (https://www.zcool.com.cn/)

We use CrawlSpider as the crawling tool.

Create the spider from the command line:

```shell
cd zcool
scrapy startproject zcool
cd zcool
scrapy genspider -t crawl zcoolSpider https://www.zcool.com.cn/
```

Next comes some routine setup. You can follow the same steps in later Scrapy projects.

start.py

Create start.py so that the Scrapy spider can be run from inside PyCharm:

```python
from scrapy import cmdline

# Equivalent to typing "scrapy crawl zcoolSpider" in a terminal.
cmdline.execute("scrapy crawl zcoolSpider".split(" "))
```
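cmdline.execute expects an argv-style list rather than a single string, which is why the command is split first. The short sketch below (plain Python, no Scrapy required) shows what that split produces. The shlex.split variant is an alternative added here for illustration and is not part of the original start.py.

```python
import shlex

command = "scrapy crawl zcoolSpider"

# str.split(" ") breaks the command on single spaces, giving an argv-style list.
argv = command.split(" ")
print(argv)

# shlex.split follows shell quoting rules, so it also copes with quoted arguments.
argv_shlex = shlex.split(command)
print(argv_shlex)
```

For a command without quotes or repeated spaces, as here, both approaches give the same list.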

In settings.py, turn off the "gentlemen's agreement" (robots.txt compliance, controlled by ROBOTSTXT_OBEY) and set your own User-Agent:

```python
BOT_NAME = 'zcool'

SPIDER_MODULES = ['zcool.spiders']
NEWSPIDER_MODULE = 'zcool.spiders'

# Do not obey robots.txt
ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'my user-agent'  # placeholder: replace with your own User-Agent string
}
```

Set start_urls in zcoolSpider.py (the spider file). In this walkthrough we crawl the "Featured" section; its URL is the one used in start_urls here:

```python
name = 'zcoolSpider'
allowed_domains = ['zcool.com.cn']
start_urls = ['https://www.zcool.com.cn/discover/0!3!0!0!0!!!!1!1!1']
```

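The pattern linking page numbers to URLs is easy to spot:

Every listing page has the form https://www.zcool.com.cn/discover/0!3!0!0!0!!!!1!1! followed by the page number.

So the rule (a regular expression) should be written like this: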

```python
Rule(LinkExtractor(allow=r'.+0!3!0!0!0!!!!1!1!\d+'), follow=True)
```
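To sanity-check the pagination pattern without running a crawl, it can be tried with the standard re module. This is only a sketch: it assumes LinkExtractor applies the allow pattern as a regular-expression search against each absolute URL, and the helper page_url and the candidate list are made up for illustration.

```python
import re

# Same pattern string as the allow= argument of the pagination rule.
PAGE_PATTERN = re.compile(r'.+0!3!0!0!0!!!!1!1!\d+')

BASE = 'https://www.zcool.com.cn/discover/0!3!0!0!0!!!!1!1!'


def page_url(page: int) -> str:
    """Build the listing URL for a given page number (illustrative helper)."""
    return f'{BASE}{page}'


candidates = [
    page_url(1),
    page_url(2),
    page_url(15),
    BASE,                          # no page number at the end
    'https://www.zcool.com.cn/',   # home page
]

for url in candidates:
    # True means the pagination rule would follow this link.
    print(url, bool(PAGE_PATTERN.search(url)))
```

The first three URLs match; the last two do not, because the pattern requires at least one digit after the final exclamation mark.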

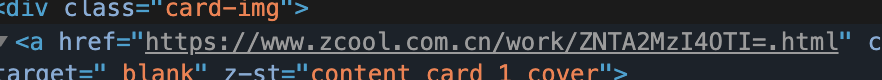

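The detail pages follow an equally obvious pattern: https://www.zcool.com.cn/work/ + a string of letters + =.html

The rule (a regular expression) should be written like this: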

```python
Rule(LinkExtractor(allow=r'.+work/.+html'), follow=False, callback="parse_detail")
```
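The detail-page pattern is fairly permissive. The sketch below compares it with a stricter variant using the standard re module. The stricter pattern, the work ID, and the sample URLs are all hypothetical, chosen only to illustrate the "letters followed by =.html" shape described above.

```python
import re

# Pattern from the rule above.
LOOSE = re.compile(r'.+work/.+html')

# Hypothetical stricter variant: /work/<letters or digits>=.html at the end of the URL.
STRICT = re.compile(r'/work/[A-Za-z0-9]+=\.html$')

# Made-up URLs for illustration; the work ID is not a real one.
detail_url = 'https://www.zcool.com.cn/work/ZMTIzNDU2Nzg=.html'
listing_url = 'https://www.zcool.com.cn/discover/0!3!0!0!0!!!!1!1!2'
odd_url = 'https://www.zcool.com.cn/work/list?ref=xhtml'

for url in (detail_url, listing_url, odd_url):
    print(url, bool(LOOSE.search(url)), bool(STRICT.search(url)))
```

Both patterns accept the detail URL and reject the listing URL, but only the loose one accepts the odd third URL. Whether the extra strictness is worth it depends on which links actually appear on the site.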

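With the rules written, the CrawlSpider can now find every detail page on its own. Next we process those detail pages.

parse_detail

Each detail page contains many images, so we want to put all the images from one page into the same folder and name that folder after the page's title, which makes them easier to browse later. The callback therefore needs to extract two things: the title and the image links.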

```python
title = response.xpath("//div[@class='details-contitle-box']/h2/text()").getall()
# The heading may come back as several text nodes; join them and trim whitespace.
title = "".join(title).strip()
```

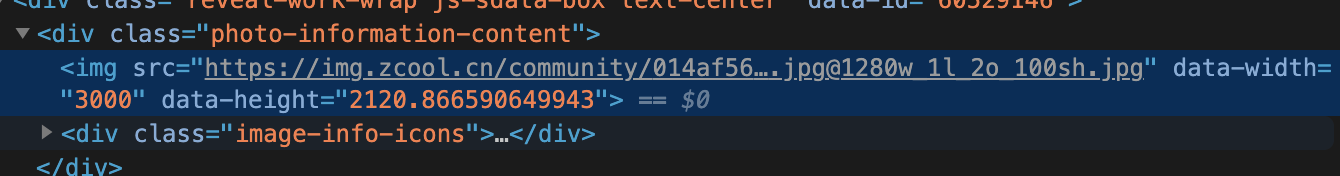

Use the class attribute of the div tag to locate the image links:

```python
image_urls = response.xpath("//div[@class='photo-information-content']/img/@src").getall()
```
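The two extraction steps can be tried offline on a small fragment. The sketch below uses the standard library's xml.etree.ElementTree as a stand-in for Scrapy's selector, so the calls differ from response.xpath(...).getall(). The markup and the example.com image URLs are invented for illustration and are far simpler than a real detail page.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for a detail page. Real pages are messier
# HTML and need Scrapy's HTML-tolerant parser.
SAMPLE = """
<html><body>
  <div class="details-contitle-box"><h2>
      Sample Work Title
  </h2></div>
  <div class="photo-information-content"><img src="https://img.example.com/a.jpg"/></div>
  <div class="photo-information-content"><img src="https://img.example.com/b.jpg"/></div>
</body></html>
""".strip()

root = ET.fromstring(SAMPLE)

# Title: collect the h2 text, join the pieces, and trim surrounding whitespace.
title_parts = [h2.text or "" for h2 in root.findall(".//div[@class='details-contitle-box']/h2")]
title = "".join(title_parts).strip()

# Image links: read the src attribute of each img inside the content divs.
image_urls = [
    img.get("src")
    for img in root.findall(".//div[@class='photo-information-content']/img")
]

print(title)
print(image_urls)
```

The strip call matters because the heading text arrives with the surrounding newlines and indentation from the markup.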

P.S. You can check whether an XPath expression is correct with the XPath Helper browser extension.

In this case the expression does return the image URLs.

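items.py

```python
import scrapy


class ZcoolItem(scrapy.Item):
    title = scrapy.Field()        # detail-page title, used as the folder name
    image_urls = scrapy.Field()   # URLs for ImagesPipeline to download
    images = scrapy.Field()       # filled in by ImagesPipeline after download
```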

Using items.py in zcoolSpider.py

```python
from ..items import ZcoolItem
...
class ZcoolspiderSpider(CrawlSpider):
    ...
    def parse_detail(self, response):
        ...
        item = ZcoolItem(title=title, image_urls=image_urls)
        return item
```

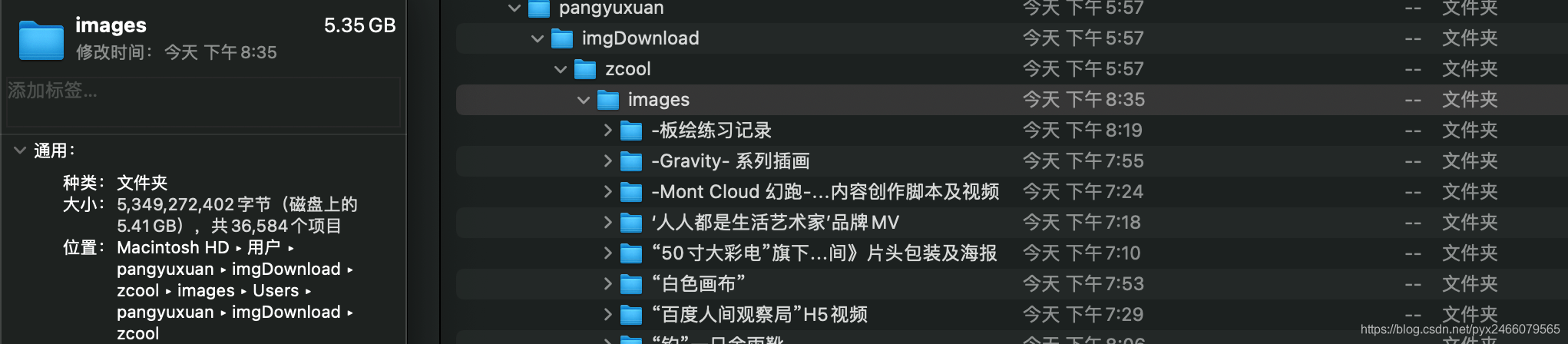

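Enable the pipeline in settings.py and set the image storage path

```python
import os

# <project root>/images, where the project root is the parent of the folder containing settings.py
IMAGES_STORE = os.path.join(os.path.dirname(os.path.dirname(__file__)), 'images')

ITEM_PIPELINES = {
    'zcool.pipelines.ZcoolPipeline': 300,
}
```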

Here os.path.dirname returns the path of the parent directory, __file__ refers to this file itself (settings.py), and os.path.join joins path components together.
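The path arithmetic can be followed step by step with a made-up location for settings.py. The directory names below are assumptions for illustration; in the real project, __file__ supplies the path.

```python
import os

# Hypothetical location of settings.py inside a Scrapy project.
settings_file = os.path.join("workspace", "zcool", "zcool", "settings.py")

# First dirname: the package folder that contains settings.py.
package_dir = os.path.dirname(settings_file)

# Second dirname: the project root, one level up.
project_dir = os.path.dirname(package_dir)

# Join the project root with the folder name used for downloads.
images_store = os.path.join(project_dir, "images")

print(package_dir)
print(project_dir)
print(images_store)
```

The images folder therefore ends up next to the inner zcool package rather than inside it.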

pipelines.py

```python
from scrapy.pipelines.images import ImagesPipeline
from zcool import settings
import os
import re


class ZcoolPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        media_requests = super(ZcoolPipeline, self).get_media_requests(item, info)
        # Attach the item to each request so file_path can read its title later.
        for media_request in media_requests:
            media_request.item = item
        return media_requests

    def file_path(self, request, response=None, info=None, *, item=None):
        # Default path looks like "full/<hash>.jpg".
        origin_path = super(ZcoolPipeline, self).file_path(request, response, info)
        title = request.item['title']
        # Remove characters that are not allowed in folder names.
        title = re.sub(r'[\\/:\*\?"<>\|]', "", title)
        save_path = os.path.join(settings.IMAGES_STORE, title)
        image_name = origin_path.replace("full/", "")
        return os.path.join(save_path, image_name)
```

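Note the line title = re.sub(r'[\\/:\*\?"<>\|]',"",title) above. We want to use the detail page's title as the folder name, but folder names cannot contain any of these characters: \ / : * ? " < > |, so we use a regular expression to strip them from the title.

That completes the spider for this walkthrough. Running it should download the images into IMAGES_STORE, grouped into one folder per detail-page title. The complete source of each file follows.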
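The cleaning step and the resulting save path can be tried on their own. In this sketch the title, the store folder, and the file name are made up, and sanitize_title and target_path are illustrative helpers rather than part of the pipeline. Inside a character class the characters * ? | need no backslash, so the pattern below is equivalent to the one in the pipeline.

```python
import os
import re

# Characters that Windows does not allow in file or folder names.
FORBIDDEN = re.compile(r'[\\/:*?"<>|]')


def sanitize_title(title: str) -> str:
    """Remove characters that cannot appear in a folder name."""
    return FORBIDDEN.sub("", title)


def target_path(store: str, title: str, default_path: str) -> str:
    """Mirror the pipeline's logic: <store>/<clean title>/<file name>."""
    image_name = default_path.replace("full/", "")
    return os.path.join(store, sanitize_title(title), image_name)


raw_title = 'Logo: "A/B" <test>?'   # hypothetical title
clean = sanitize_title(raw_title)
print(clean)  # Logo AB test
print(target_path("images", raw_title, "full/0a1b2c.jpg"))
```

A title made up entirely of forbidden characters would become an empty string, so a fallback folder name may be worth adding in practice.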

zcoolSpider.py

```python
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule
from ..items import ZcoolItem


class ZcoolspiderSpider(CrawlSpider):
    name = 'zcoolSpider'
    allowed_domains = ['zcool.com.cn']
    start_urls = ['https://www.zcool.com.cn/discover/0!3!0!0!0!!!!1!1!1']

    rules = (
        # Listing pages: keep following pagination links.
        Rule(LinkExtractor(allow=r'.+0!3!0!0!0!!!!1!1!\d+'), follow=True),
        # Detail pages: parse them, but do not follow links any further.
        Rule(LinkExtractor(allow=r'.+work/.+html'), follow=False, callback="parse_detail")
    )

    def parse_detail(self, response):
        image_urls = response.xpath("//div[@class='photo-information-content']/img/@src").getall()
        title = response.xpath("//div[@class='details-contitle-box']/h2/text()").getall()
        title = "".join(title).strip()
        item = ZcoolItem(title=title, image_urls=image_urls)
        return item
```

items.py

```python
import scrapy


class ZcoolItem(scrapy.Item):
    title = scrapy.Field()
    image_urls = scrapy.Field()
    images = scrapy.Field()
```

pipelines.py

```python
from scrapy.pipelines.images import ImagesPipeline
from zcool import settings
import os
import re


class ZcoolPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        media_requests = super(ZcoolPipeline, self).get_media_requests(item, info)
        for media_request in media_requests:
            media_request.item = item
        return media_requests

    def file_path(self, request, response=None, info=None, *, item=None):
        origin_path = super(ZcoolPipeline, self).file_path(request, response, info)
        title = request.item['title']
        title = re.sub(r'[\\/:\*\?"<>\|]', "", title)
        save_path = os.path.join(settings.IMAGES_STORE, title)
        image_name = origin_path.replace("full/", "")
        return os.path.join(save_path, image_name)
```

settings.py

```python
import os

BOT_NAME = 'zcool'

SPIDER_MODULES = ['zcool.spiders']
NEWSPIDER_MODULE = 'zcool.spiders'

# Do not obey robots.txt
ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'my user-agent'  # placeholder: replace with your own User-Agent string
}

ITEM_PIPELINES = {
    'zcool.pipelines.ZcoolPipeline': 300,
}

IMAGES_STORE = os.path.join(os.path.dirname(os.path.dirname(__file__)), 'images')
```